Introducing Turing Tensor Cores

Graphics powerhouse NVIDIA announced Turing, its latest GPU architecture, which the company claimed was a big leap forward in graphics technology. NVIDIA CEO Jensen Huang put it this way: “Turing is NVIDIA’s most important innovation in computer graphics in more than a decade.” He also introduced Turing GPUs, which enable real-time ray tracing. The Turing architecture is geared towards AI, deep learning, and ray tracing, and its Tensor Cores are the units that accelerate the AI and deep learning work.

NVIDIA Turing GPUs include:

- REAL-TIME RAY TRACING IN GAMES: Ray tracing is the definitive solution for lifelike lighting, reflections, and shadows, offering a level of realism far beyond what’s possible using traditional rendering techniques. Turing is the first GPU capable of real-time ray tracing.

- POWERFUL AI-ENHANCED GRAPHICS: Artificial intelligence is driving the greatest technology advancement in history, and Turing is bringing it to computer graphics. Armed with Tensor Cores that deliver AI computing horsepower, Turing GPUs can run powerful AI algorithms in real time to create crisp, clear, lifelike images and special effects that were never before possible.

- NEW ADVANCED SHADING TECHNOLOGIES: Programmable shaders defined modern graphics. Turing GPUs feature new advanced shading technologies that are more powerful, flexible, and efficient than ever before. Combined with GDDR6—the world’s fastest memory—this performance lets you tear through games with maxed-out settings and incredibly high frame rates.

What are Tensor Cores, and what is new in Turing Tensor Cores? What are the deep learning features for inference? This article explains these terms.

Turing Tensor Cores

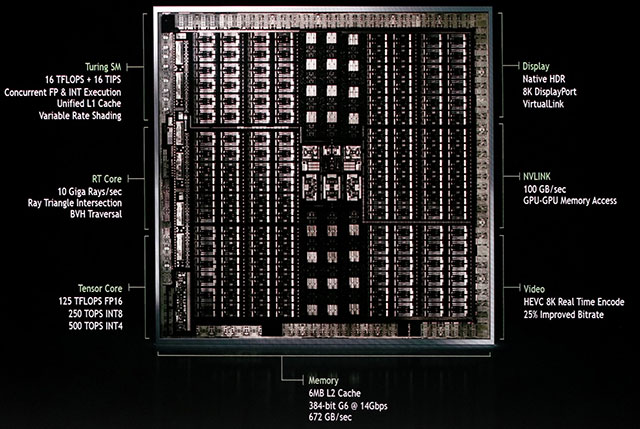

Tensor Cores are specialized execution units designed specifically for performing the tensor / matrix operations that are the core compute function used in Deep Learning. Similar to Volta Tensor Cores, the Turing Tensor Cores provide tremendous speed-ups for matrix computations at the heart of deep learning neural network training and inferencing operations. Turing GPUs include a new version of the Tensor Core design that has been enhanced for inferencing.
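To make the "matrix operation" concrete: the basic Tensor Core primitive is a matrix multiply-accumulate, D = A × B + C. The following is only a minimal pure-Python sketch of that arithmetic, assuming FP16 inputs and an FP32 accumulator; the helper names (`to_fp16`, `to_fp32`, `mma`) are hypothetical, and the code emulates the rounding in software rather than calling any NVIDIA API.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma(a, b, c):
    """Return D = A x B + C with FP16 inputs and an FP32-rounded accumulator."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = []
    for i in range(rows):
        row = []
        for j in range(cols):
            acc = to_fp32(c[i][j])  # start from the accumulator matrix C
            for k in range(inner):
                # Inputs are rounded to FP16; the running sum is rounded to FP32.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            row.append(acc)
        d.append(row)
    return d


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[0.5, 0.0], [0.0, 0.5]]
c = [[1.0, 1.0], [1.0, 1.0]]
print(mma(a, b, c))
```

The hardware performs this operation on small matrix tiles in parallel; the nested loops here only illustrate the arithmetic and the precision trade-off, not the performance.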

NVIDIA Tensor Core technology has brought incredible acceleration to AI, reducing training time from weeks to hours and providing massive acceleration to inference. NVIDIA Turing™ Tensor Core technology provides multi-precision capabilities for efficient AI inference. Turing Tensor Cores provide a range of precisions for deep learning training and inference, from FP32 to FP16 to INT8, as well as INT4, delivering large performance gains over previous NVIDIA GPUs.

Turing Tensor Cores add new INT8 and INT4 precision modes for inferencing workloads that can tolerate quantization and don’t require FP16 precision. Turing Tensor Cores bring new deep learning-based AI capabilities to GeForce gaming PCs and Quadro-based workstations for the first time. A new technique called Deep Learning Super Sampling (DLSS) is powered by Tensor Cores. DLSS leverages a deep neural network to extract multidimensional features of the rendered scene and intelligently combine details from multiple frames to construct a high-quality final image. DLSS uses fewer input samples than traditional techniques such as TAA, while avoiding the algorithmic difficulties such techniques face with transparency and other complex scene elements.
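What "tolerating quantization" means can be illustrated with a small sketch. It assumes simple symmetric linear quantization with one scale factor per list of values; this is one common scheme, not necessarily what any NVIDIA tool uses, and the function names and sample weights are made up for illustration.

```python
def quantize(values, bits):
    """Symmetric linear quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4 (symmetric range)
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [q * scale for q in codes]


def max_error(values, bits):
    """Largest absolute round-trip error after quantizing to `bits` bits."""
    codes, scale = quantize(values, bits)
    restored = dequantize(codes, scale)
    return max(abs(v - r) for v, r in zip(values, restored))


weights = [0.02, -0.75, 0.31, 0.5, -0.12, 1.0]  # illustrative values only
print("INT8 max error:", max_error(weights, 8))
print("INT4 max error:", max_error(weights, 4))
```

Fewer bits mean a coarser grid and a larger round-trip error, which is why the lower-precision modes suit only networks whose accuracy holds up under that error.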

Deep Learning Features for Inference

Turing GPUs deliver exceptional inference performance. The Turing Tensor Cores, along with continual improvements in TensorRT (NVIDIA’s run-time inferencing framework), CUDA, and cuDNN libraries, enable Turing GPUs to deliver outstanding performance for inferencing applications. Turing Tensor Cores also add support for fast INT8 matrix operations to significantly accelerate inference throughput with minimal loss in accuracy. New low-precision INT4 matrix operations are now possible with Turing Tensor Cores and will enable research and development into sub-8-bit neural networks.
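As a rough illustration of why INT8 arithmetic can stay close to the floating-point result, the sketch below computes a dot product entirely on integer codes and rescales it once at the end. It assumes one scale factor per vector and uses hypothetical helper names and made-up numbers; it does not use TensorRT or any GPU library, which handle calibration and execution in practice.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    scale = max(abs(v) for v in values) / 127 or 1.0
    return [round(v / scale) for v in values], scale


def int8_dot(x, w):
    """Dot product computed on INT8 codes, then rescaled back to float."""
    qx, sx = quantize_int8(x)
    qw, sw = quantize_int8(w)
    acc = sum(a * b for a, b in zip(qx, qw))  # pure integer multiply-accumulate
    return acc * sx * sw  # a single floating-point rescale at the end


activations = [0.9, -0.4, 0.25, 0.05]  # illustrative values only
weights = [0.3, 0.8, -0.6, 0.1]
exact = sum(a * b for a, b in zip(activations, weights))
approx = int8_dot(activations, weights)
print("float:", exact, "int8:", approx)
```

The inner loop touches only small integers, which is the part the hardware can execute at much higher throughput than FP16 or FP32 math.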

A great AI inference accelerator must deliver not only great performance but also the flexibility to accelerate diverse neural networks and to let developers build new ones. Low latency at high throughput is the most important performance requirement when deploying AI inference. Across GPU generations, NVIDIA Tensor Cores offer a full range of precisions (TF32, bfloat16, FP16, INT8, and INT4) for maximum flexibility and performance; of these, TF32 and bfloat16 arrived with the later Ampere architecture, while Turing Tensor Cores cover FP16, INT8, and INT4.

TU102 is the highest-performance GPU in the Turing family, and the GeForce RTX 2080 Ti is built on it. Turing Tensor Cores greatly accelerate the matrix calculations at the core of deep learning training and inference. Building on this architecture, iRender offers machine configurations specialized for AI inference, AI training, deep learning, VR/AR, and more. Each configuration provides 6 or 12 RTX 2080 Ti cards with 11 GB of VRAM each, and customers can choose from a variety of GPU Cloud for AI/DL service packages.

Register here to try our services. If you have any questions, please contact us.

Source: nvidia.com